A course is a sequence of student experiences, made from three different types of elements. This section explains what the elements are.

A good skills course has:

- Lessons: content that explains how to do tasks

- Exercises: tasks that students do

- Relationships: instructors help students learn, students help each other

SCDM courses are flipped. There are no lectures. Students read and watch lessons outside of class. Class time is for working on tasks, and for cheering.

Each course has a website, with the lessons, exercises, and tools for the course. Students submit their work, and get feedback through the site. All of the screenshots below are from Skilling, but you could use other software.

Lessons

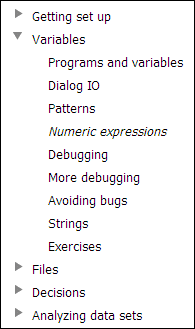

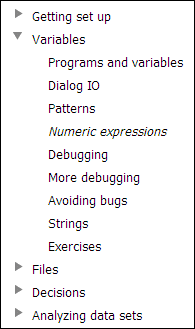

The lessons are a SCDM course’s textbook. They’re in a lesson tree, like this one from a programming course:

A “module” is a chunk of the course. There are five modules in this course. Each module has lessons. The Variables module has eight lessons. The last entry – Exercises – isn’t a lesson. More later.

The course helps students learn how to write programs that analyze data sets. The last module – Analyzing data sets – is about the course’s target tasks. The modules before that help students learn what they need to know, before the lessons in the last module will make sense.

Lesson structure

Most of my lessons have a similar structure:

- Activate prior knowledge

- How the lesson fits with the course

- Lesson body

- Summary

- Exercise

Lessons start by activating prior knowledge. Human memory works by hooking new knowledge to existing knowledge. Connections are influenced by what you’ve been thinking about lately. Say you’ve been thinking about concept A, and immediately learn about B. Your memory will link A and B. In the future, if you think about A again, there’s a good chance that B will pop into your mind. The spreading activation model has been standard since the ’70s.

We can take advantage of this priming effect when writing lessons. Suppose we have a lesson about concept A, followed by a lesson about concept B. If we want students to hook A and B together, we start the lesson on B, by reminding them about A. That’s activating prior knowledge (APK).

You can APK with text…

In the last lesson, we talked about A.

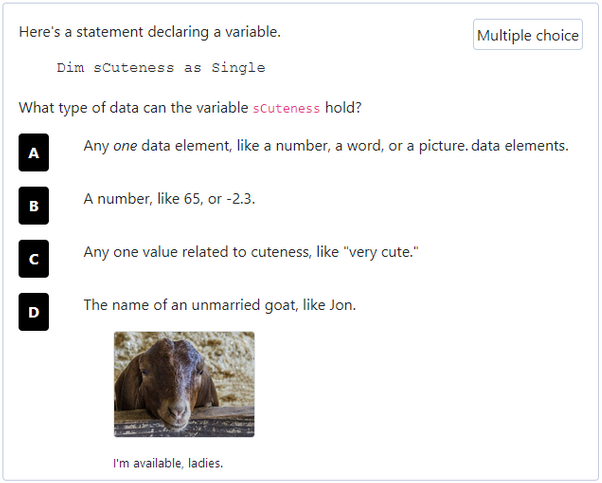

Even better, use a multiple-choice question (MCQ). Answering an MCQ requires deeper processing than reading text.

(The goat is the Official Animal of the course this is from.)

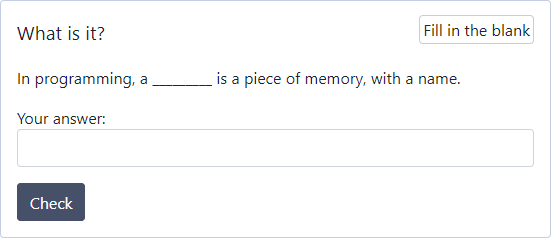

Better still, you can ask a fill-in-the-blank (FiB) question.

MCQs require students to recognize the right answer. That is, the right answer is given, and students pick it from a list. FiBs require students to recall the right answer. Recall (FiBs) encourages deeper processing than recognition (MCQs).

APK items show up in the middle of my lessons, too, especially for long lessons. Memory activations at the start of a long lesson might have faded part way through the lesson.

So, where are we? We have a sequence of lessons, most with a similar structure:

- Activate prior knowledge DONE

- How the lesson fits with the course

- Lesson body

- Summary

- Exercise

After APK, I usually give the lesson’s goals, placing the lesson in the context of the module and/or course. Then there’s the main body of the lesson, where the good stuff is, followed by a summary, and an exercise.

The exercise

Before we get to the body, let’s talk about the exercise, and transfer distance. As you might guess, the exercise at the end of a lesson uses the schemas, procedures, and facts of the lesson.

One issue is that students know which schemas to use for the exercise. However, in the real world, they’ll have to work that out for themselves. What to do?

Here’s that lesson tree again.

The Variables module has eight lessons. The last one is Strings.

After Strings, there’s another item: Exercises. This isn’t a lesson, but a bunch of, er, exercises. The exercises are outside of a lesson context. Students have to work out which concepts to use.

In my programming courses, the concepts needed for the exercises can be from the current module, or any prior module of the course. The exercises at the end of the final module can use information from the entire course.

Programming courses tend to be cumulative like this. That isn’t an artifact of the course, but a property of the domain itself.

Other domains are different. For example, in statistics, you can work out correlation coefficients without using ANOVA, and vice versa. So, exercises in the correlation module might not use concepts from the ANOVA module.

The body

We’re talking about lessons, the first of three SCDM course elements. Many of my lessons have a similar structure.

- Activate prior knowledge (APK)

- How the lesson fits with the course

- Lesson body

- Summary

- Exercise

The body is the meat of the lesson. It’s made up of several elements in different orders and combinations:

- Direct explanations

- Worked examples

- Reflection questions

- Simulations

Direct explanations

Direct explanations are what you expect. “Here’s how you…”

Maybe your but-face is saying, “But direct explanation is so unhep!” Direct explanations make good use of students’ time. Direct explanations are efficient, and effective. More so than discovery, experiential, or inquiry-based learning.

Save discovery for advanced classes, where students already have a strong foundation. Remember, SCDM is for intro courses, where students don’t know enough to explore efficiently.

The classic paper about this is from 2006. The title says it all: “Why Minimal Guidance During Instruction Does Not Work: An Analysis of the Failure of Constructivist, Discovery, Problem-Based, Experiential, and Inquiry-Based Teaching.” By Paul Kirschner, John Sweller, and Richard Clark. Yeah, them. If you’re into learning science, you know their names.

Worked examples

Worked examples are what you think they are: someone doing a task, and explaining as they go. I use them a lot.

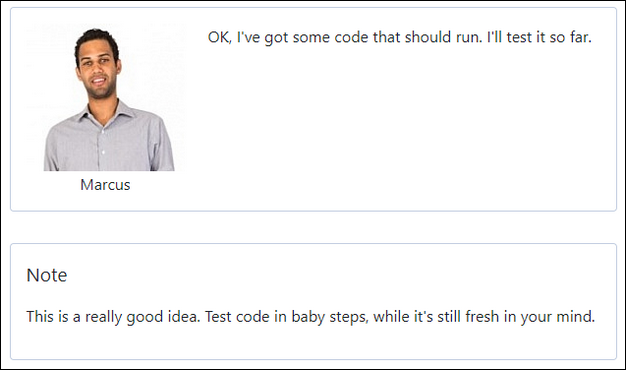

Two things about my worked examples. First, I often use characters. Barsha Chetia writes about various uses for characters. My usage is quite specific: characters are fake students, going through the course with the real students. Characters are photographs, headshots with different expressions. Happy, confused, skeptical, etc. I tend to use the same four in my courses: Adela, Georgina, Marcus, and Ray. You’ll meet them soon.

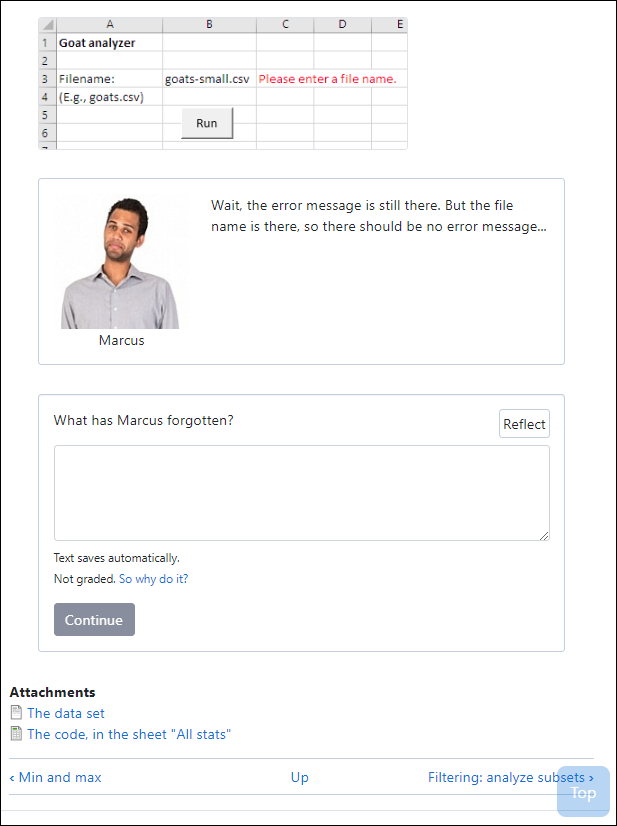

Characters have several uses, like asking questions, and encouraging each other. In worked examples, they model good practice, like breaking a task into pieces, and tackling one piece at a time (decomposition). Of course, the characters aren’t perfect. Sometimes they mess up, and have to figure out where they went wrong.

Characters approach problems in different ways. For example, Ray likes to design programs with paper and pencil, using boxes and arrows. Georgina prefers to type high-level comments in a code editor, then flesh them out.

Characters have emotional responses. This is from the end of a lesson:

Ray is the worst programmer in the group. (Adela, a Latina, is the best.) Ray is nervous about being singled out, but, with a little help from his friends, he gets the job done.

I also annotate worked examples, noting good practices, and things to avoid. An example:

So, where are we? We’re talking about lessons, showing students how to use concepts (schemas, procedures, and facts) to do tasks. We’re looking at things I commonly do in lesson bodies (in addition to APK and other stuff). I use direct explanations, and a lot of worked examples. The worked examples use characters, and annotations.

Reflection questions

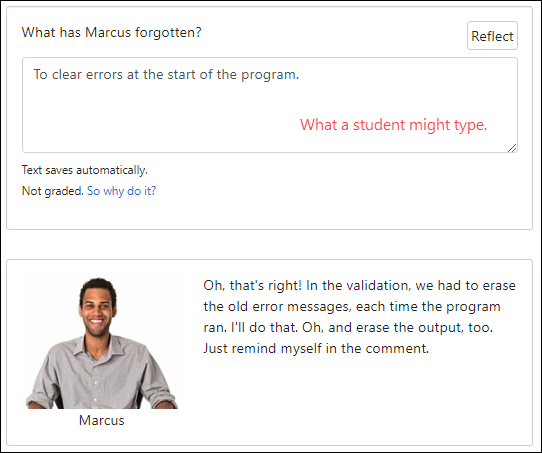

I also use reflection questions. Here’s an example:

Students type their answers into the textarea. Notice that the content below the reflection question is hidden. What you see in the screenshot is normal end-of-lesson stuff.

After students type their answer and press Continue, a character gives an answer.

When students access the lesson again, their earlier responses should still be there (that’s what Skilling does). Students can also get a report of all of their responses across all lessons.

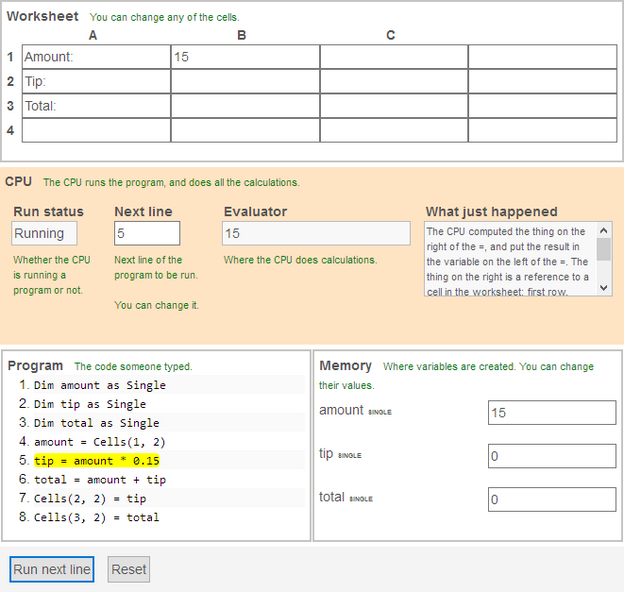

Simulations

Here’s a simulation from a programming course:

Students can run the program one line at a time, and watch what the CPU does.

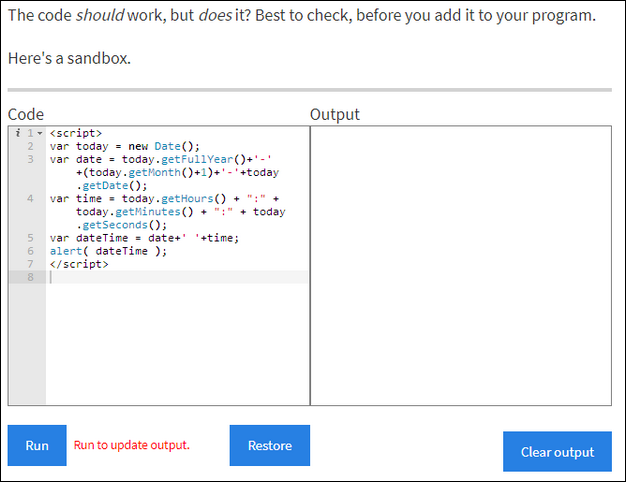

Sometimes, I’ve added “sandboxes” to programming courses, so students can test code directly in lessons. For example:

Simulations and reflection questions go together nicely. Put a simulation widget in a lesson, and ask: “What would happen if you…?”

Simulations are great in statistics courses. Let students watch the central limit theorem in action, as they mess with samples from different distributions.
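To give a feel for what such a simulation does under the hood, here's a minimal Python sketch. It's an illustration only, not a Skilling widget: the function names, the exponential distribution, and the text histogram are all assumptions made for the example. It draws many samples from a skewed distribution, and shows how the sample means bunch up as the sample size grows.

```python
import random
import statistics


def sample_means(draw, sample_size, num_samples, seed=0):
    """Return the means of num_samples samples, each of sample_size draws."""
    rng = random.Random(seed)  # Seeded, so every student sees the same run.
    return [
        statistics.fmean([draw(rng) for _ in range(sample_size)])
        for _ in range(num_samples)
    ]


def text_histogram(values, bins=10, width=40):
    """Build a crude text histogram, one line per bin."""
    low, high = min(values), max(values)
    step = (high - low) / bins or 1.0  # Avoid a zero step if all values match.
    counts = [0] * bins
    for v in values:
        index = min(int((v - low) / step), bins - 1)
        counts[index] += 1
    tallest = max(counts)
    return [
        f"{low + i * step:7.3f} | " + "#" * round(width * c / tallest)
        for i, c in enumerate(counts)
    ]


def skewed(rng):
    """One draw from a heavily skewed distribution (exponential, mean 1)."""
    return rng.expovariate(1.0)


if __name__ == "__main__":
    # Bigger samples: the means cluster more tightly, and look more bell-shaped.
    for n in (1, 5, 50):
        means = sample_means(skewed, sample_size=n, num_samples=2000)
        print(f"\nSample size {n}: spread of means = {statistics.stdev(means):.3f}")
        print("\n".join(text_histogram(means)))
```

A reflection question could sit right under it: "What would happen to the histogram if you changed the sample size to 500?"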

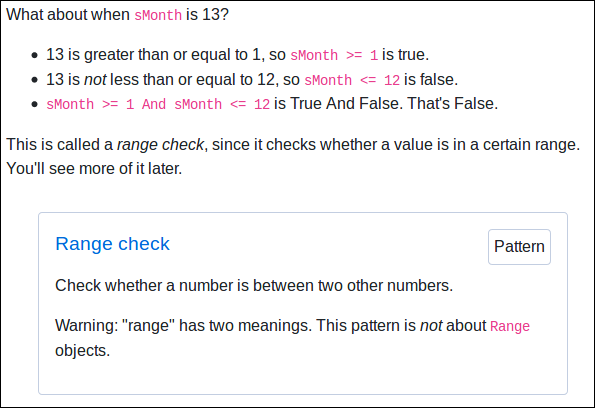

Lessons and concepts

The four methods – direct explanations, worked examples, reflection, and simulations – are used to explain concepts, that is, schemas, procedures, and facts. For example, here the range check pattern (a pattern is a schema type, remember) is introduced in a direct explanation:

Patterns are explicit in a SCDM course. Patterns are pedagogical objects, that exist separately from the lessons where they are introduced. Students are encouraged to think of patterns as tools they can use in problem solving.
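As a rough idea of what a range check pattern might look like in code, here's a hypothetical Python sketch. It isn't taken from the course's lessons: the function names, the wording of the message, and the 0 to 1000 limits are assumptions for illustration. The point is that the pattern is a reusable tool, separate from any one task that uses it.

```python
def range_check(value, low, high, label):
    """Range check pattern: return an error message if value is outside
    [low, high], or None if the value is acceptable."""
    if value < low or value > high:
        return f"Sorry, {label} must be between {low} and {high}."
    return None


def compute_tip(meal_cost, tip_percent=15):
    """A small whole task that uses the pattern before doing its real work."""
    error = range_check(meal_cost, 0, 1000, "meal cost")  # Limits are made up.
    if error is not None:
        raise ValueError(error)
    return round(meal_cost * tip_percent / 100, 2)


if __name__ == "__main__":
    print(compute_tip(60))
    print(range_check(-5, 0, 1000, "meal cost"))
```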

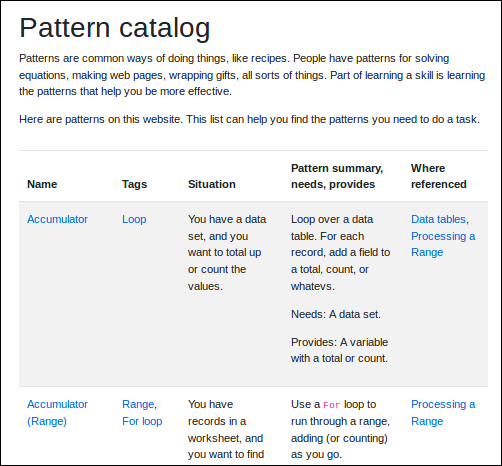

To help students think this way, Skilling gathers the patterns across a course in a pattern catalog:

When students do tasks outside the context of a particular lesson (e.g., exercises at the end of a module, or a project in another course), the catalog reminds students of the patterns they have learned.

There are lists of principles and models, too.

Customizing

It might help to customize the direct explanations, worked examples, and such. For example, here’s a lesson tree again.

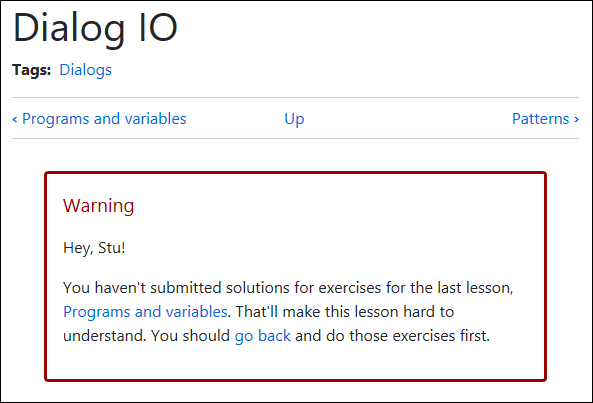

The lessons are meant to be done in sequence. That means doing exercises, as well as reading. So, if a student starts reading Dialog IO without having done the exercises for Programs and variables, they should see:

Skilling lets you add conditions to… well, lots of things. An author could add this to a lesson:

annotation. type=warning condition=![exercise:submitted:exercise_custom_tip_amount] Hey, [current-user:field_first_name:value]! You haven't submitted solutions for exercises for the last lesson, "Programs and variables":/lesson/programs-and-variables. That'll make this lesson hard to understand. You should "go back":/lesson/programs-and-variables and do those exercises first. /annotation.

An annotation is a note, warning, suggestion, cool thing… whatever makes sense for the course. The condition is where the magic is. The exclamation mark (!) means not. So if the student has not submitted a solution for the exercise exercise_custom_tip_amount (each exercise has an internal name), then show the annotation.

The logged in student is called Stu Dent. You can see how the annotation includes Stu’s first name in the message.

BTW, authors type this in themselves. They’re the ones who know what makes sense pedagogically. Authors can add conditions, without any help from programmers.
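If you're curious how a condition like that could be evaluated, here's a minimal Python sketch. To be clear, this is not Skilling's code. The regular expression, the function names, and the shape of the student record are assumptions; the sketch only handles the one condition form shown above.

```python
import re

# Matches an optional "!" followed by [exercise:submitted:<internal name>].
CONDITION = re.compile(r"^(!?)\[exercise:submitted:([A-Za-z0-9_]+)\]$")


def condition_holds(condition, submitted_exercises):
    """Evaluate one condition string against a student's submitted exercises."""
    match = CONDITION.match(condition.strip())
    if match is None:
        raise ValueError(f"Unrecognized condition: {condition!r}")
    negated = match.group(1) == "!"
    exercise_name = match.group(2)
    submitted = exercise_name in submitted_exercises
    # A leading "!" flips the result: show when NOT submitted.
    return not submitted if negated else submitted


def render_annotation(condition, message, student):
    """Return the personalized message if the condition holds, else None."""
    if not condition_holds(condition, student["submitted"]):
        return None
    return message.replace(
        "[current-user:field_first_name:value]", student["first_name"]
    )


if __name__ == "__main__":
    stu = {"first_name": "Stu", "submitted": set()}  # Hypothetical record.
    print(render_annotation(
        "![exercise:submitted:exercise_custom_tip_amount]",
        "Hey, [current-user:field_first_name:value]! Go back and do the exercises.",
        stu,
    ))
```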

You can use conditions for other things, too. For example, if a student has a learning disability, you can remind them of special services that might help with the course. If you have many students from China, you can add explanations of special terms. In Chinese, of course.

You can even do academic advising. For example, if a student in a statistics course has completed 80% of the exercises just four weeks into the semester, you might show them a message like this:

Hey, Sarah! It looks like you’re really into this course. That’s great!

If you want, ask your advisor about the data analytics major. You might like it.

You can customize lessons based on a student’s performance, major, interests, preparation… almost anything you can store data about.

So far…

We want to help students learn schemas, procedures, and facts, and know how to use them to do tasks. Lessons, exercises, and instructors help. Lessons use direct explanations, worked examples, reflection questions, and simulations. Lessons can start with MCQs and FiBs to prime memory. Patterns are explicit, and listed in a pattern catalog.

Let’s proceed. Here are the parts of a SCDM course:

- Lessons: content that explains how to do tasks DONE

- Exercises: tasks that students do UP NEXT

- Relationships: instructors help students learn, students help each other

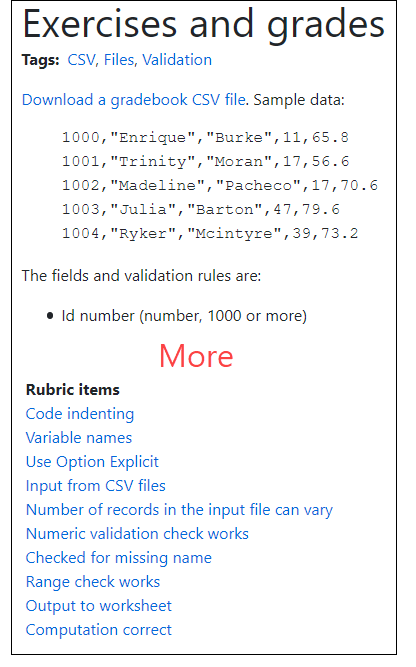

Exercises

A SCDM course has many small-stakes exercises, either whole- or part-task. Students get individual formative feedback, with chances to resubmit incomplete work.

Let’s break that down.

Many exercises

Having many exercises means that each new exercise adds only a little cognitive complexity. It’s like the difference between a staircase with three big stairs, and one with eleven small stairs. They both get you to the same place, but the one with eleven stairs is easier to climb. More people will reach the top.

My courses have about 40 exercises each. Students get lots of practice, with success after success. It also creates a heavy grading load, but there are ways to handle that, as you’ll see.

Small stakes

Each exercise is small-stakes. If a student misses some of them, it’s not the end of the world. Students also can experiment, without make-or-break consequences.

Whole- and part-task

There are whole-task and part-task exercises. Whole tasks are like those students will face in the real world. They have a context, and a goal that makes sense in that context. For example, a whole task might be “Here’s some data on product returns. Are returns in the Midwest different from returns in the South?”

A part-task is a fragment of a task, used to do a whole task. For example, for the returns data task, two part-tasks might be:

- Import data into Excel.

- Use a filter to separate data into subsamples.

Most exercises in my programming courses are whole-task. The tasks are simple to start with, like computing the tip for a meal at a restaurant. Although it’s easy, it’s a task people actually do.

Part-task exercises help with important subtasks that are easily isolated. Practicing those steps separately helps ensure that students don’t get stuck when they’re doing whole tasks. With enough practice, students complete subtasks automatically, with little cognitive load.

An example from a programming course is evaluating numeric expressions. Programs use numeric expressions a lot. The more easily students can work with them, the better. For example:

“Individual formative feedback” means that every student gets explanatory feedback on every rubric item for every exercise, personalized for their own work. For example, for the “Numeric validation check works” item, a student might be told:

Yay! The numeric data check works.

Or maybe:

Check that the data is numeric. See the numeric validation lesson.

Or perhaps:

Numeric validation check is on the wrong field!

You might be thinking:

Kieran, you lovable kook. That means thousands of individual decisions on student work, every semester! That’s not feasible.

Two things. First, it is feasible. We just need the right tools to make efficient workflows. I’ve been doing it for years. More later.

Second, remember the goal: help students learn problem-solving skills. To do this, we must give formative feedback at scale. Skipping individual formative feedback is not an option. If you don’t give formative feedback, you forfeit any right to complain about students.

Resubmission

One last part:

A good skills course has many small-stakes exercises, either whole- or part-task. Students get individual formative feedback, with chances to resubmit incomplete work.

In my courses, student submissions are marked as either complete, or not. Submissions have to hit every item on the exercise’s rubric to be marked complete.

If submissions are not complete, students get a chance to improve their work, based on the feedback. Students have a reason to read feedback, and improve.
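The complete-or-not rule is simple enough to sketch in a few lines of Python. This is an illustration under my own assumptions, not how Skilling stores things: the `RubricResult` class, its fields, and the report layout are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class RubricResult:
    item: str       # The rubric item being judged.
    met: bool       # Did the submission satisfy this item?
    feedback: str   # Explanatory feedback shown to the student.


def is_complete(results):
    """A submission is complete only if every rubric item is met.
    An empty rubric never counts as complete."""
    return bool(results) and all(result.met for result in results)


def feedback_report(results):
    """List what went right and what needs work, then the overall status."""
    lines = [
        f"{'OK  ' if r.met else 'TODO'} {r.item}: {r.feedback}" for r in results
    ]
    if is_complete(results):
        status = "Complete"
    else:
        status = "Not complete yet. Please improve your work and resubmit."
    return "\n".join(lines + [status])


if __name__ == "__main__":
    first_try = [
        RubricResult("Numeric validation check works", False,
                     "Numeric validation check is on the wrong field!"),
        RubricResult("Output is labeled", True, "Yay! The output is labeled."),
    ]
    print(feedback_report(first_try))
```

Notice there are no weights or scores here. Each item is either met or not, and one unmet item is enough to send the work back for another try.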

There’s your but-face again. “But that makes the course too easy!” The goal isn’t to make a course hard. It’s to help people learn skills. Resubmission helps learning, so it’s good practice.

“But that means everyone gets an A!”

No. Be more precise in your thinking. Tasks-for-learning and tasks-for-evaluating-students are different. I give exercises for learning, and exams for evaluation. More later.

Up next

We’re working through the three elements of a SCDM course.

- Lessons: content that explains how to do tasks DONE

- Exercises: tasks that students do DONE

- Relationships: instructors help students learn, students help each other UP NEXT

Relationships

Remember that SCDM skills courses are flipped. No lectures.

There are still synchronous sessions, when students and instructors meet. “Meet” can be face-to-face, in a traditional classroom. It can be virtual, using services like Webex or Zoom. Meetings can be required, or optional. Whatever the case, instructors need to be available.

So, what do instructors do?

Emotional tone and rapport

One of an instructor’s most important jobs is to give each course a positive emotional tone. Not “make it funny,” or “make it easy.” I teach programming, and programming is frustrating. Argh! My computer hates me with all its being. At least, it seems that way.

Lessons and exercises can be engineered for emotional engagement, with characters, Official Animals, and links to students’ interests. Still, it’s instructors who add high-touch, joyful elements to their courses. I get to know my students’ names, and what they like. We chat, tell jokes, laugh… we have fun together.

Troubleshoot

Students get stuck as they do tasks. Different students get stuck on different things. Each student needs help on their own particular stuckness.

Instructors help students find and fix problems with their code, analyses, database queries, or whatever. They work with students one-on-one, on each person’s particular issues.

Note the careful wording above. Instructors don’t find and fix problems in student code. Instructors help students find and fix problems. Be ready to sit and watch students struggle. You’ll want to jump in, but don’t. Struggle is good, to a point.

Explain

Sometimes students get stuck because they misunderstand concepts. Instructors can help.

I often ask students to explain their code to me. “What does that line do?” This helps me pinpoint misconceptions.

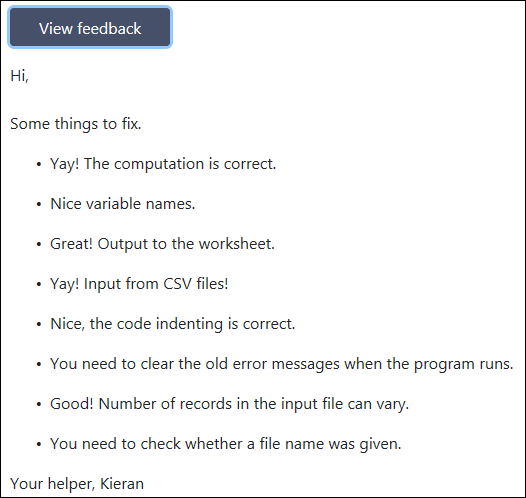

Feedback

Students need lots of feedback. Later, we’ll see how Skilling’s feedback system is fast and efficient. Still, the work needs to be done. Instructors either do it themselves, or work with graders.

For each submission, students get a list of things they did right, and things they need to improve. They might get suggestions for how to improve, and references to material they should study. Here’s some sample feedback:

For my intro courses, I hire a grader. Look for someone who is reliable, and gives feedback within a day or two. That’s more important than expertise. You don’t need a Ph.D. (or any degree) to grade intro programs.

Evaluate

Instructors evaluate students, giving As, Bs, etc. Summative evaluation judges the students themselves, giving one number that’s supposed to capture their expertise.

“Supposed to,” but… well, it’s complicated. One number can’t fully capture someone’s ability to use schemas, procedures, and facts to do tasks in context.

Skilling’s feedback system doesn’t give grades, as such. In fact, the system doesn’t even have the concepts of weights and scores. That seems strange at first, until you remember the goal: help students improve.

The only grade-like-thing is whether students completed the exercise, or not. You get to decide what “complete” means. If you want a mastery course, you can say that students only complete an exercise when they meet every rubric item. That’s what I do. More later on the feedback system.

To determine course grades, I give students exams. I grade them myself, rather than leaving them to the grader. I use the feedback system, and add some manual score allocation to the workflow. I don’t claim that my judging-students system is perfect, just that it doesn’t suck. Compared to what I’ve seen in my long professoring career, it’s good. I get no pushback from students, some of whom are carefully watching the grading process. With eyes that would put a hawk to shame.

Encourage

Instructors encourage students to keep trying. How? Partly it’s a matter of course design, that is, what the author of the course does. For example, students’ beliefs about their ability to succeed are an important predictor of their effort. That’s one reason why complexity should increase slowly over many exercises. This is designed into the course, before the semester starts.

So what do I do in my instructor role? When a student I’m helping fixes something, I chant their name in a loud, theater-trained voice. “Katie! Katie! Katie!”

If a student says, “Oh, that was a stupid mistake I made,” that’s about the only time I’ll criticize them. I tell them that I’ve been programming for decades (yes, I’m old), and that I make mistakes often. It’s not stupid. It’s how human brains work.

I encourage students to help each other. If a student has a problem, sometimes I’ll ask another student who’s already worked it out to help, and leave them alone. Both students benefit.

All my courses have a fixed grading scale, set before the course starts. Students can help each other, without reducing their grade. Nobody can “blow the curve,” since there is no curve. This should reduce social pressure for high performers to limit their achievement, although I don’t have evidence for that.

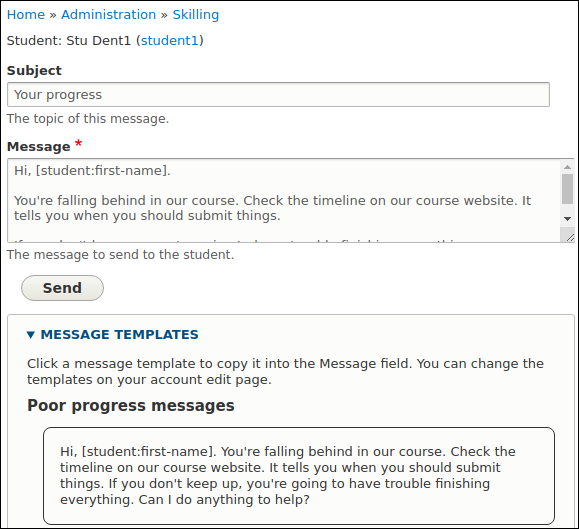

Monitor

Monitoring student progress is a joint responsibility of students and instructors. Each course has a calendar, with a suggested due date for each exercise. If there are 40 exercises, there are 40 due dates, so the calendar is quite detailed.

I make the due dates “soft,” suggested rather than mandated. Life happens. If a student gets a few days behind, that’s OK.

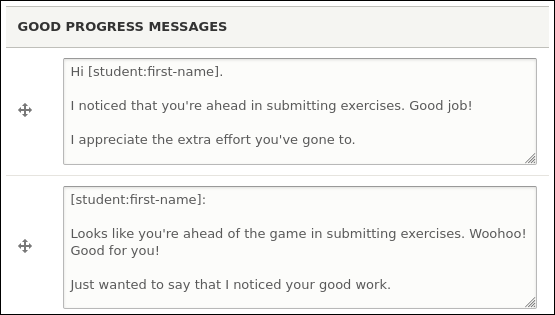

Instructors have monitoring tools. For example, there’s a report for instructors, showing the progress of every student. Instructors can click a button to send messages to individual students.

Notice the message templates. There are some default templates, but instructors can make their own. The templates make it easy to send messages, using language that’s been thought through beforehand.

Instructors are not limited to “Do you need help?” messages. Instructors can send messages to students who are working ahead, recognizing their extra effort. There are message templates for that, too.
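Here's a rough Python sketch of the logic behind that kind of monitoring. It's hypothetical, not Skilling's report: the function names, the template names, and the three-day grace period are assumptions. Because due dates are soft, a student only gets a "Do you need help?" nudge after slipping more than a few days.

```python
from datetime import date


def days_behind(calendar, completed, today):
    """Days since the earliest suggested due date the student has missed
    (0 if nothing is overdue). calendar maps exercise name -> due date."""
    overdue = [due for name, due in calendar.items()
               if due < today and name not in completed]
    return (today - min(overdue)).days if overdue else 0


def is_working_ahead(calendar, completed, today):
    """True if the student has completed an exercise that isn't due yet."""
    return any(due > today for name, due in calendar.items()
               if name in completed)


def pick_template(calendar, completed, today, grace_days=3):
    """Choose a message template name, or None if no message seems needed."""
    if days_behind(calendar, completed, today) > grace_days:
        return "do_you_need_help"   # Hypothetical template name.
    if is_working_ahead(calendar, completed, today):
        return "nice_work_ahead"    # Hypothetical template name.
    return None


if __name__ == "__main__":
    calendar = {
        "tip": date(2024, 1, 10),
        "discount": date(2024, 1, 17),
        "loop": date(2024, 1, 24),
    }
    print(pick_template(calendar, {"tip"}, date(2024, 1, 20)))
```

The instructor still decides whether to send anything. The sketch only suggests which template to start from.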

Administer

There’s also administrative work. Creating classes, adding student accounts, adding grader accounts… yuck, but it has to be done. The best we can do is make workflows that aren’t painful.

Summary

SCDM courses have three elements: lessons, exercises, and relationships. Instructors set emotional tone, build rapport, troubleshoot, explain concepts, give (or arrange for) feedback, evaluate students for grades, encourage, and monitor. They might also do some administrative tasks, like making accounts for students. Students help each other.