Two things I want to talk about here: gathering data to guide improvement, and making courses easy to change.

Data to guide improvement

Skilling, and every LMS, gathers data on student behavior and performance. However, the best data I get is from working with students in class. When a student asks a question about something they don’t understand, that’s data on course effectiveness. Not that students’ errors are all the course’s “fault.” Still, each student’s misunderstanding is a clue to be investigated.

Skilling’s grading system gathers data on student performance, down to the rubric item level. That can be more than 10,000 (!) data points in a typical course of 50 students. Eventually, a set of data analysis programs will come with Skilling, to generate standard reports from exports of student history data. For example, a report might identify the rubric items with the most failures across all exercises.
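The "most failures" report mentioned above could be sketched roughly as follows. This is not Skilling's actual export format or reporting code. The record fields (`exercise`, `rubric_item`, `passed`), the function name, and the sample rows are all made up for illustration.

```python
from collections import Counter

def most_failed_rubric_items(records, top_n=3):
    """Count failures per rubric item across all exercises.

    Each record is a dict with hypothetical keys: 'exercise',
    'rubric_item', and 'passed' (True or False).
    """
    failures = Counter(
        record["rubric_item"] for record in records if not record["passed"]
    )
    # most_common returns (rubric_item, failure_count) pairs, highest first
    return failures.most_common(top_n)

# Invented sample rows, only to show the shape of the data
sample_records = [
    {"exercise": "Tip calculator", "rubric_item": "Input validated", "passed": False},
    {"exercise": "Tip calculator", "rubric_item": "Output formatted", "passed": True},
    {"exercise": "Loan payment", "rubric_item": "Input validated", "passed": False},
    {"exercise": "Loan payment", "rubric_item": "Output formatted", "passed": False},
    {"exercise": "Loan payment", "rubric_item": "Variables named well", "passed": True},
    {"exercise": "Grade average", "rubric_item": "Input validated", "passed": False},
]

for item, count in most_failed_rubric_items(sample_records):
    print(f"{item}: {count} failures")
```

A rubric item that fails often across several exercises is a hint that the lessons behind it need another look.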

The best exercises are challenging enough to push students to think, but not so difficult as to be demotivating. The “sweet spot” is called the zone of proximal development. How to estimate whether students are in the zone?

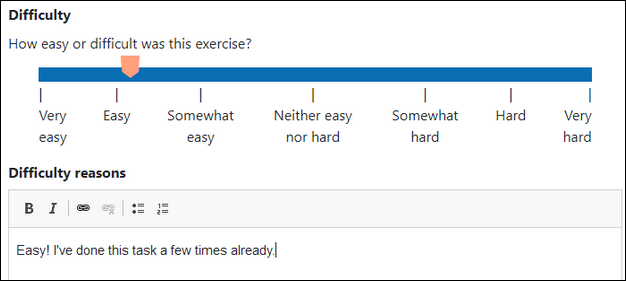

When students submit exercise solutions, they say how difficult the exercise was. Giving reasons is optional.

Preliminary data suggests that resubmissions are higher for exercises rated as more difficult, which makes sense. However, that’s a tentative finding at the moment.
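One simple way to look at that relationship is to average resubmission counts for each difficulty rating. The sketch below assumes a 1-to-5 rating scale and a list of (rating, resubmissions) pairs. Both are assumptions for illustration, not Skilling's real data model, and the sample numbers are invented.

```python
from collections import defaultdict
from statistics import mean

def mean_resubmissions_by_difficulty(submissions):
    """Average resubmission count for each difficulty rating.

    submissions: iterable of (difficulty_rating, resubmission_count)
    pairs. The rating scale is a hypothetical 1 (easy) to 5 (hard).
    """
    grouped = defaultdict(list)
    for difficulty, resubmissions in submissions:
        grouped[difficulty].append(resubmissions)
    # Sort by rating so the result reads from easy to hard
    return {rating: mean(counts) for rating, counts in sorted(grouped.items())}

# Invented numbers, not real course data
sample_submissions = [(1, 0), (1, 1), (3, 1), (3, 2), (5, 3), (5, 2)]

for rating, average in mean_resubmissions_by_difficulty(sample_submissions).items():
    print(f"Difficulty {rating}: {average} resubmissions on average")
```

If the averages rise with the rating, that is consistent with the tentative finding. It would take more data, and a proper statistical test, to say anything stronger.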

Making courses easy to change

Many flipped courses use video lectures. It makes sense, if you’re used to lecture courses. Record lectures given in face-to-face courses, and you have what you need.

My courses are mostly text and images (and simulations, MCQs, FiBs, etc.). Video is reserved for two purposes. First, there are emotional appeals. Modules of my courses have short (less than 5 minute) videos, where I talk about why the content matters. The videos put a face on the course, especially for students I never meet.

I also use videos to show dynamic processes. For example, for an exercise where students write a D&D-like character generator, there’s a video showing how it should work.

Other than that, I stick to text and images, for several reasons.

- Content consumption is easier, since students control pacing. Students read as quickly or slowly as they want. It’s easy to go back and reread a paragraph, or a piece of code.

- Accessibility is easier.

- Seamlessly replacing content is easier.

The last is particularly important, especially if you use agile processes. Say I want to improve a worked example, perhaps insert an annotation. If the content were video, I’d have to record the new content with a microphone and screen capture, use an editor to insert the new content into the video, and replace the existing video on the course website. Further, unless I get the lighting and sound right, the new video will have jarring transitions.

Life is easier when the content is text and images. Edit a page on the website, type in the new content, paste in images, and save. Done! No continuity problems, either. It won’t be obvious that new content was patched in.

“But video helps learning!” Not necessarily. Video can help with some aspects of learning, and make other aspects more difficult. Check out one of Donald Clark’s blog posts for an intro.

“But people like video!” Perhaps. It may be that most people are more willing to pay for a video course than a text/image course. That’s a sales and marketing issue. SCDM is about learning.

Why not AI-powered automatic grading?

Skilling’s grading system relies on human judgement. It makes good use of graders’ time, automating everything it can, but at the center is a human grader. Why not use an AI-powered autograder?

First, they’re difficult to get right. You need someone to give grading rules, or train the software on thousands of examples for each exercise. When something is less than perfect, students will be complaining to instructors, looking for adjustments. Not a good way to keep a positive emotional tone.

Second, because high-quality autograders are hard to build, they limit the flexibility that agile methods demand. With an autograder, you might be reluctant to change an exercise, or add new ones. Content updates, like moving a course to a new version of Excel, can be fraught with difficulty.

Third, autograders can’t recognize alternate solutions, or handle nuance. I’m happy when a student comes up with a new way to solve a problem. I compliment them on it. An autograder would reject their work. A general problem with AI software is that it doesn’t know when it doesn’t know, that is, when a task is outside its domain of competence.

With human graders, you can change rubrics, add and change exercises, whatever you want. Tell them what you’ve done, and you’re good to go.
